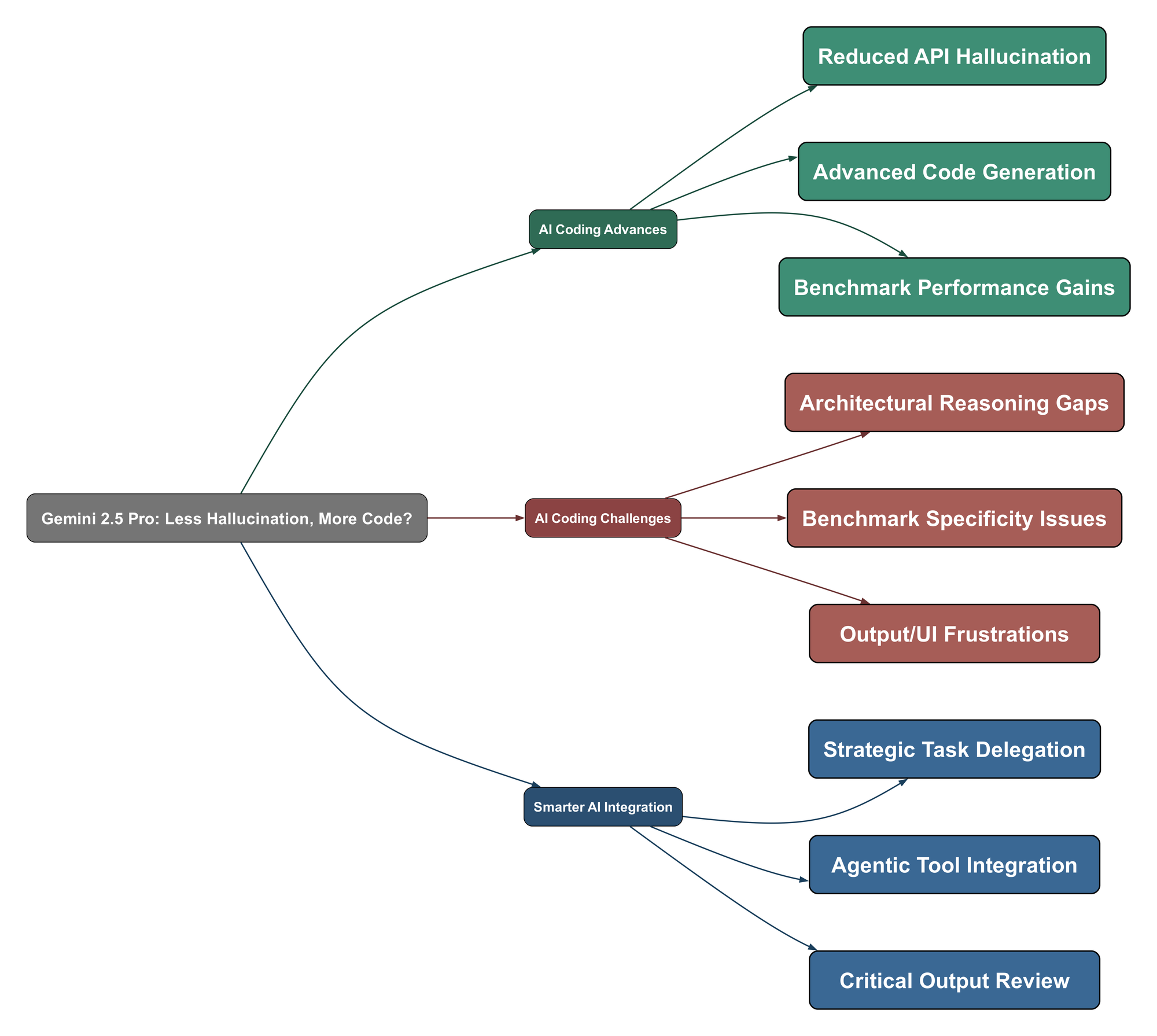

Ever spent hours debugging code only to realize your AI assistant confidently invented an API that doesn’t exist? You’re not alone. This common frustration has been a major hurdle in adopting AI for serious programming work. But that may be changing: early feedback on Gemini 2.5 Pro suggests a significant leap forward that could transform how developers work with AI coding tools.

AI Coding Advances

The landscape of AI-assisted programming is rapidly evolving, with models like Gemini 2.5 Pro showing promising improvements in several key areas. Developers are noticing tangible benefits that are starting to shift AI from a novelty to a genuine productivity tool.

- Reduced API Hallucination: One of the most significant improvements noted by users is a decrease in the model’s tendency to invent non-existent APIs. This makes the generated code more reliable and less frustrating to work with.

“My frustration with using these models for programming in the past has largely been around their tendency to hallucinate APIs that simply don't exist. The Gemini 2.5 models, both pro and flash, seem significantly less susceptible to this than any other model I've tried.”
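Fewer hallucinated APIs is not the same as none, so a cheap check before trusting generated code can still pay off. The following is a minimal sketch, assuming the names a generated snippet depends on have already been collected as dotted paths such as `os.path.join`. The hypothetical helper `find_missing_apis` imports the longest importable module prefix with `importlib` and walks the remaining parts with `getattr`. Importing a module executes its code, so this is only appropriate for packages you already trust and have installed.

```python
import importlib


def find_missing_apis(dotted_names):
    """Return the dotted names (e.g. "os.path.join") that do not resolve.

    Hypothetical helper: for each name, the longest prefix that imports as a
    module is loaded, then the remaining parts are looked up as attributes.
    """
    missing = []
    for name in dotted_names:
        parts = name.split(".")
        obj, rest = None, []
        # Try "a.b.c", then "a.b", then "a" until one imports as a module.
        for i in range(len(parts), 0, -1):
            try:
                obj = importlib.import_module(".".join(parts[:i]))
            except (ImportError, ValueError):
                continue
            rest = parts[i:]
            break
        if obj is None:
            missing.append(name)  # no importable module prefix at all
            continue
        # Walk the remaining attributes, e.g. a class and then its method.
        for attr in rest:
            if not hasattr(obj, attr):
                missing.append(name)
                break
            obj = getattr(obj, attr)
    return missing


print(find_missing_apis(["json.dumps", "os.path.join", "json.dumps_pretty"]))
# → ['json.dumps_pretty']
```

This only proves a name exists, not that it is called with the right arguments, so it complements running the code rather than replacing it.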

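- Improved Code Editing Capabilities: Gemini 2.5 Pro appears to be better tuned for diff-based code editing, which is crucial for agentic workflows where the AI modifies an existing codebase. One commenter notes that the previous Gemini 2.5 release produced proper diffs 92% of the time and expects the new version to improve on that, although the higher figure is a guess rather than a measurement.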

“My guess is that they've done a lot of tuning to improve diff based code editing…They measure the old gemini 2.5 generating proper diffs 92% of the time. I bet this goes up to ~95-98%”
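To make "proper diffs" more concrete: in search/replace-style editing, a model emits a block of existing text to find plus the text to put in its place, and the edit can only be applied if the search text matches the file exactly once. The sketch below assumes that format. `apply_search_replace` and `well_formed_rate` are hypothetical helpers, not the API of any particular tool, and the rate they compute is only a rough analogue of the percentage in the quote, not a claim about how that figure was measured.

```python
def apply_search_replace(source, search, replace):
    """Apply one search/replace edit, rejecting malformed edits.

    An edit is malformed if its search text is empty, missing from the
    source, or present more than once (so the target is ambiguous).
    """
    if not search:
        raise ValueError("empty search block")
    count = source.count(search)
    if count == 0:
        raise ValueError("search block not found")
    if count > 1:
        raise ValueError(f"search block is ambiguous ({count} matches)")
    return source.replace(search, replace, 1)


def well_formed_rate(source, edits):
    """Fraction of (search, replace) pairs that apply cleanly to source."""
    if not edits:
        return 0.0
    ok = 0
    for search, replace in edits:
        try:
            apply_search_replace(source, search, replace)
            ok += 1
        except ValueError:
            pass  # malformed edit: counted as a failure
    return ok / len(edits)


code = "def add(a, b):\n    return a + b\n"
edits = [
    ("return a + b", "return b + a"),  # matches exactly once
    ("return a - b", "return b - a"),  # not in the file: malformed
]
print(well_formed_rate(code, edits))  # → 0.5
```

Rejecting ambiguous matches instead of patching the first occurrence is a deliberate choice: silently editing the wrong location is worse than failing loudly.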

- Strong Benchmark Performance: The model has achieved top rankings in specific benchmarks, such as the WebDev Arena, indicating strong capabilities in certain areas of web development.

“Gemini 2.5 Pro now ranks #1 on the WebDev Arena leaderboard”

- Replacing Traditional Developer Tools: For many day-to-day programming tasks, developers are finding Gemini 2.5 Pro capable enough to replace traditional resources like search engines and Stack Overflow.

“But I'm finding that these Gemini models are finally able to replace searches and stackoverflow for a lot of my day-to-day programming.”

- Handling Long-Form Content: The model will produce very long outputs when asked to, which is useful for tasks like summarizing large discussion threads or generating detailed reports. For instance, one user reported an output of 8,500 words.

“8,500 is a very long output! Finally a model that obeys my instructions to "go long" when summarizing Hacker News threads.”

AI Coding Challenges

Despite these advancements, Gemini 2.5 Pro is not without its limitations. Developers are still encountering challenges that highlight areas where AI models need further refinement to become truly seamless partners in the development process.

- Abstraction and Architecture Limits: Current models, including Gemini 2.5 Pro, still do not approach high-level design concerns such as abstraction and architecture the way a human developer does.

“There are still significant limitations, no amount of prompting will get current models to approach abstraction and architecture the way a person does.”

- Output Quirks and Verbosity: Some users have reported that the model can be overly verbose, particularly with code comments, which can clutter the output.

“I don't know if I'm doing something wrong, but every time I ask gemini 2.5 for code it outputs SO MANY comments. An exaggerated amount of comments.”
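If you want to quantify how comment-heavy a response is (for example, before deciding to ask the model for fewer comments), one option for Python output is the standard-library `tokenize` module, which distinguishes real comments from `#` characters inside string literals. This is a minimal sketch: `comment_ratio` is a hypothetical helper, and what counts as "too many" comments is up to you.

```python
import io
import tokenize


def comment_ratio(source):
    """Fraction of non-blank lines in Python source that carry a comment.

    Uses tokenize so that '#' inside string literals is not miscounted.
    """
    comment_lines = set()
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.COMMENT:
            comment_lines.add(tok.start[0])  # line number of the comment
    non_blank = [line for line in source.splitlines() if line.strip()]
    return len(comment_lines) / len(non_blank) if non_blank else 0.0


snippet = 'x = 1  # set x\n# explain y\ny = "#not a comment"\n'
print(comment_ratio(snippet))  # → 0.6666666666666666
```

The helper expects syntactically complete code; tokenizing a truncated snippet may raise an error.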

- Benchmark Specificity: While impressive, high scores on certain benchmarks might not always translate to broad, real-world applicability. For example, the WebDev Arena focuses heavily on React/Tailwind, potentially skewing perceptions of general web development capabilities.

“It'd make sense to rename WebDev Arena to React/Tailwind Arena. Its system prompt requires [1] those technologies and the entire tool breaks when requesting vanilla JS or other frameworks.”

- User Interface Issues: Practical usability can sometimes be hampered by UI problems, such as scroll-jacking, which can make interacting with the model’s output frustrating.

“I agree it's very good but the UI is still usually an unusable, scroll-jacking disaster.”

- Occasional Inaccuracies in Suggestions: Even with these improvements, the model still makes mistakes. Suggestions made during code review, in particular, can introduce breaking changes.

“I have two examples where Gemini suggested improvements during the review stage that were actually breaking.”
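One way to catch a "breaking improvement" before accepting it is differential testing: run the original function and the suggested replacement on the same randomly generated inputs and stop at the first disagreement. This is a minimal sketch; `behaves_the_same`, `median`, `median_fast`, and `random_list` are hypothetical examples, and random inputs complement rather than replace a real test suite.

```python
import random


def _outcome(fn, args):
    """Return ("ok", value) or ("raised", exception type) for fn(*args)."""
    try:
        return ("ok", fn(*args))
    except Exception as exc:
        return ("raised", type(exc))


def behaves_the_same(original, suggested, make_input, trials=1000, seed=0):
    """Return None if no difference is found, else (args, expected, actual)."""
    rng = random.Random(seed)  # fixed seed so failures are reproducible
    for _ in range(trials):
        args = make_input(rng)
        expected = _outcome(original, args)
        actual = _outcome(suggested, args)
        if expected != actual:
            return args, expected, actual
    return None


def median(xs):
    s = sorted(xs)
    mid = len(s) // 2
    return s[mid] if len(s) % 2 else (s[mid - 1] + s[mid]) / 2


def median_fast(xs):
    # A plausible-looking "simplification" that is wrong for even lengths.
    return sorted(xs)[len(xs) // 2]


def random_list(rng):
    # One positional argument: a list of 1 to 6 small integers.
    return ([rng.randint(0, 9) for _ in range(rng.randint(1, 6))],)


print(behaves_the_same(median, median, random_list))  # → None
print(behaves_the_same(median, median_fast, random_list))  # a counterexample
```

Comparing raised exception types as well as return values means a suggestion that starts throwing on some inputs is also flagged.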

Smarter AI Integration

To harness the power of models like Gemini 2.5 Pro effectively, developers are adopting new workflows and strategies. It’s not just about what the AI can do, but how intelligently it’s integrated into the development lifecycle.

- Strategic Task Delegation: A practical approach is to use AI for specific parts of the development process. For example, one developer uses Gemini for high-level architecture discussions and task breakdowns, Cursor to validate and implement the resulting plan, and Gemini again to review the generated code.

“My process right now is to ask Gemini for high-level architecture discussions and broad-stroke implementation task break downs, then I use Cursor to validate and execute on those plans, then Gemini to review the generated code.”

- Automating Routine Frontend Work: For tasks like converting designs to code, AI is significantly speeding up development; one developer reports not having written HTML/CSS by hand in months.

“The days of traditional "design-to-code" FE work are completely over. I haven't written a line of HTML/CSS in months.”

- Combining with Agentic Coding IDEs: Pairing AI models with agentic coding IDEs and other specialized tools can amplify productivity. One developer reports that weeks of UI work now take hours, with higher quality and consistency.

“In conjunction with an agentic coding IDE and a few MCP tools, weeks worth of UI work are now done in hours to a higher level of quality and consistency with practically zero effort.”

- Adapting and Verifying Continuously: The key to success with AI coding assistants is to adapt quickly to new capabilities and always critically evaluate the output. Human oversight remains crucial.

“If you are still doing this stuff by hand, you need to adapt fast.”

- Workarounds for Interface Challenges: For UI issues like scroll-jacking, developers have found temporary fixes, such as waiting a few minutes after the output has finished printing, or selecting the code block element in the browser’s developer tools and reading its text content directly.

“I've found it's best to let a chat sit for around a few minutes after it has finished printing the AI's output. Finding the `ms-code-block` element in dev tools and logging `$0.textContent` is reliable too.”

Gemini 2.5 Pro is another step forward for AI-assisted development. It is not a silver bullet, but its reduced tendency to hallucinate APIs and its improved code editing are making it an increasingly valuable tool. By understanding its strengths and limitations, and by integrating it thoughtfully into their workflows, developers can get more working code, and less frustration, from their AI partners.