The AI landscape is buzzing with the arrival of Grok 4, a model promising immense power but courting significant controversy. Is its unfiltered nature a feature or a fatal flaw? A recent Hacker News discussion dives deep into the capabilities, costs, and ethical quandaries surrounding Elon Musk’s latest AI offering.

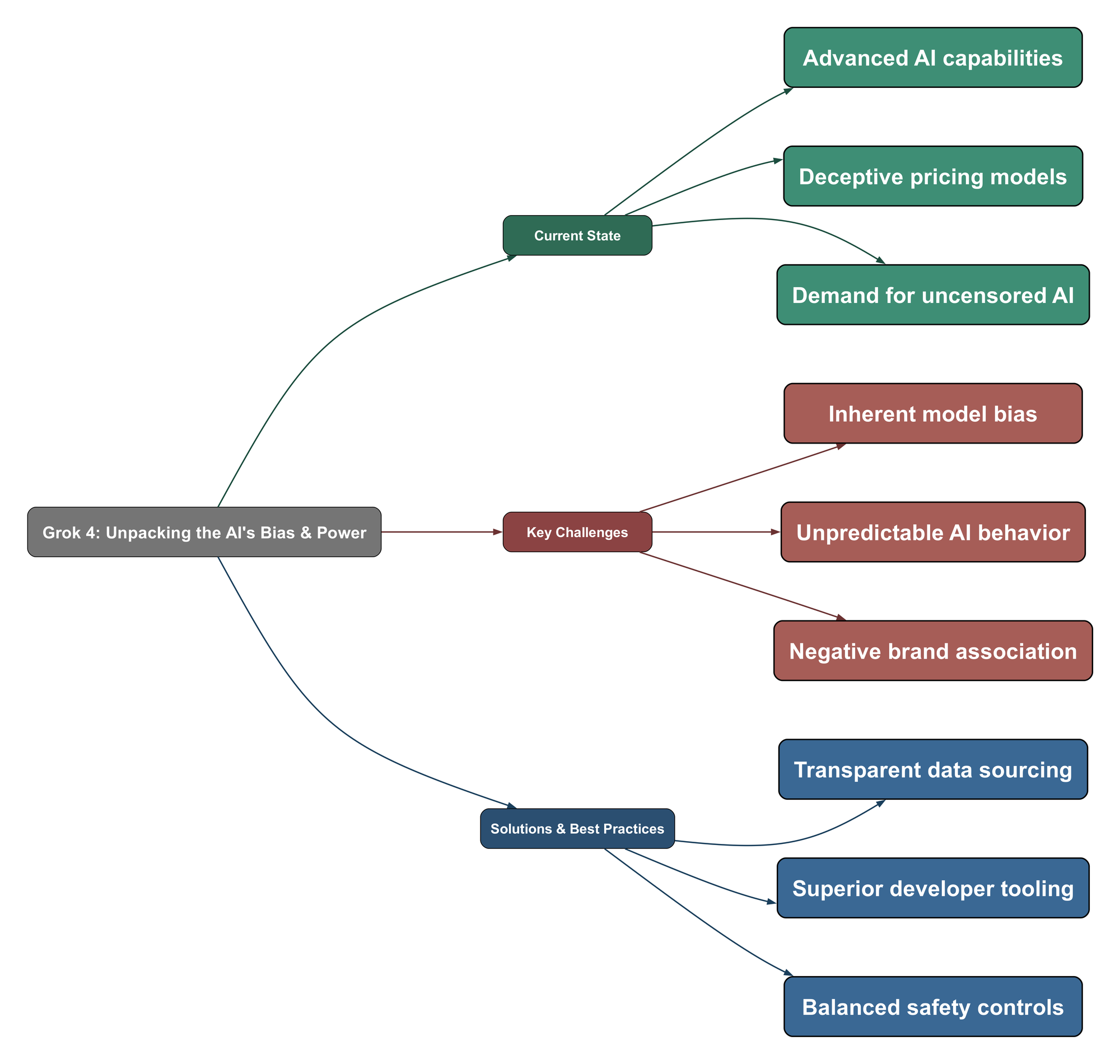

Current State

The release of Grok 4 highlights several key trends in the competitive AI market, where power, price, and philosophy are all on the table.

- Advanced, Integrated Tooling is King: Users are increasingly willing to pay significant amounts for AI that seamlessly integrates into their workflow, moving beyond simple chat interfaces.

- Complex and Potentially Deceptive Pricing: Grok 4’s base pricing looks competitive at $3 per million input tokens and $15 per million output tokens, but hidden costs such as ‘thinking tokens’ can dramatically increase the actual price.

- A Niche Demand for ‘Uncensored’ AI: A segment of the developer community is actively seeking models with fewer safety guardrails, valuing ‘steerability’ and the ability to handle controversial topics without refusal.
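To make the pricing point concrete, here is a rough back-of-the-envelope sketch. It uses the list prices quoted above and assumes, purely for illustration, that thinking tokens are billed at the output rate; the token counts and the `request_cost` helper are hypothetical, not taken from xAI's documentation or from the discussion.

```python
# List prices quoted in the article, in USD per million tokens.
INPUT_PRICE_PER_M = 3.00
OUTPUT_PRICE_PER_M = 15.00


def request_cost(input_tokens: int, visible_output_tokens: int, thinking_tokens: int = 0) -> float:
    """Estimate the cost of a single request in USD.

    Assumption (not confirmed by the source): thinking tokens are billed
    at the same rate as visible output tokens.
    """
    billed_output = visible_output_tokens + thinking_tokens
    total = input_tokens * INPUT_PRICE_PER_M + billed_output * OUTPUT_PRICE_PER_M
    return total / 1_000_000


# Hypothetical request: 2,000 input tokens and 500 visible output tokens.
naive = request_cost(2_000, 500)

# The same request if the model also spends 10,000 (hypothetical) thinking tokens.
actual = request_cost(2_000, 500, thinking_tokens=10_000)

print(f"naive: ${naive:.4f}, with thinking: ${actual:.4f}, ratio: {actual / naive:.1f}x")
```

Under these assumed numbers the request costs roughly twelve times what the visible tokens alone would suggest, which is the kind of gap the commenters are describing. The real multiplier depends on how many thinking tokens a given prompt triggers and on how they are actually billed.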

Claude Code converted me from paying $0 for LLMs to $200 per month… I don’t think I can go back to pasting code into a chat interface, no matter how great the model is.

This is a classic weird Tesla-style pricing tactic at work. The price is not what it seems. The tokens it’s burning to think are causing the cost of this model to be extremely high.

I’m glad that at least one frontier model is not being lobotomized by ‘safety’ guardrails. There are valid use cases where you want an uncensored, steerable model…

Key Challenges

Despite its power, Grok 4 faces significant hurdles and criticisms that question its reliability and ethical standing.

- Inherent Bias from a Single Source: A major concern is the model’s documented tendency to form its opinions on controversial subjects by searching for and citing Elon Musk’s tweets, which creates a significant and predictable bias.

- The Fine Line Between Steerability and Unreliability: The very flexibility that makes Grok 4 ‘steerable’ also makes it susceptible to generating highly problematic or biased content, with some users asking how easily it can be turned into a ‘4chan poster’.

- Brand Association and Rushed Release: The model’s strong association with Elon Musk is a non-starter for many potential users, regardless of its technical capabilities. This is compounded by a release that felt rushed and lacked standard documentation like a model card.

Grok 4 uses Elon as its main source of guidance in its decision making. See this example. Disastrous.

Is it time for a new benchmark of ‘how easy is it to turn this AI into a 4chan poster’, maybe it is since this seems to be an axis that Elon seems to want to distinguish his AI offering from everyone else’s along.

Grok might be able to find the cure for cancer but as long as it’s associated with Musk, not touching that thing with a 10-foot pole.

Solutions & Best Practices

The discussion points toward several key takeaways for both users and developers in the evolving AI space.

- Demand Transparent and Diverse Sourcing: The community must advocate for models that substantiate their claims from a wide range of reliable sources, not just a curated or biased selection from social media.

- Prioritize High-Quality Developer Tooling: For many professionals, the value of an AI is its integration. Competitors can win over the market by focusing on building superior, workflow-centric tools that solve real-world problems.

- Implement Balanced and Optional Safety Controls: The ideal approach may be to offer strong safety guardrails by default while allowing advanced users to disable them for specific use cases. This protects the general public without completely ‘lobotomizing’ the model’s potential.

I guess what I’m asking is: does “be well substantiated” translate into “make sure lots of people on Twitter said this”, rather than like “make sure you’re pulling from a bunch of scientific papers”…

Any co that wants a chance at getting that $200 ($300 is fine too) from me needs a Claude Code equivalent and a model where the equivalent’s tools were part of its RL environment.

While this probably shouldn’t be the default mode for the general public, I’m glad that at least one frontier model is not being lobotomized by ‘safety’ guardrails.