Large Language Models (LLMs) are rapidly transforming the software development landscape, promising unprecedented gains in productivity. But how effective are they truly, and what are the real-world experiences of developers integrating tools like Claude Code into their daily workflows? A recent Hacker News discussion dives deep into these questions, revealing both profound successes and frustrating challenges.

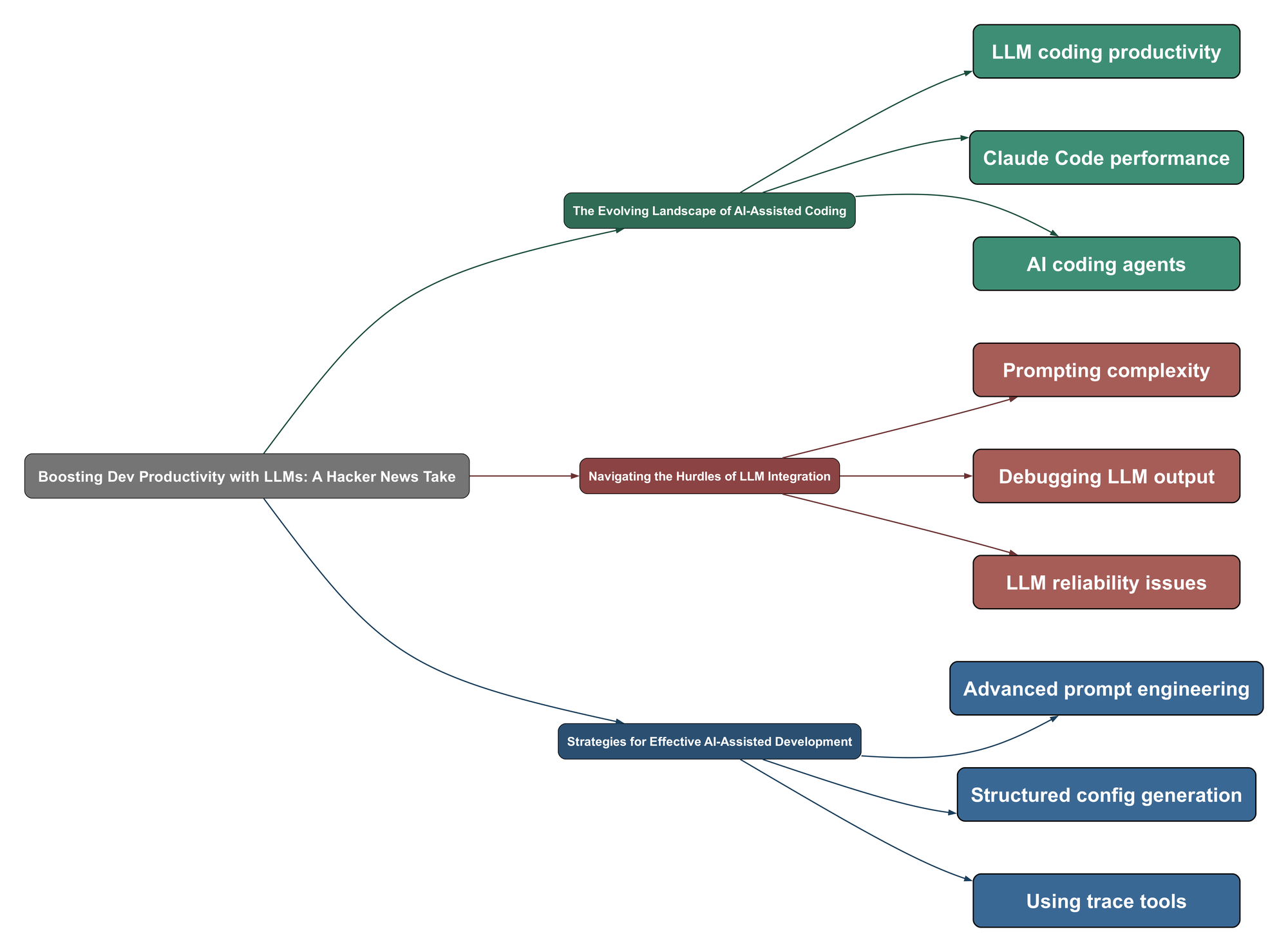

The Evolving Landscape of AI-Assisted Coding

- Many developers report significant productivity boosts, using LLMs for tasks ranging from building an initial MVP to ongoing maintenance.

- Claude Code is frequently singled out for its performance on real-world coding work, with some commenters claiming it outperforms competing models from OpenAI and Google by a wide margin.

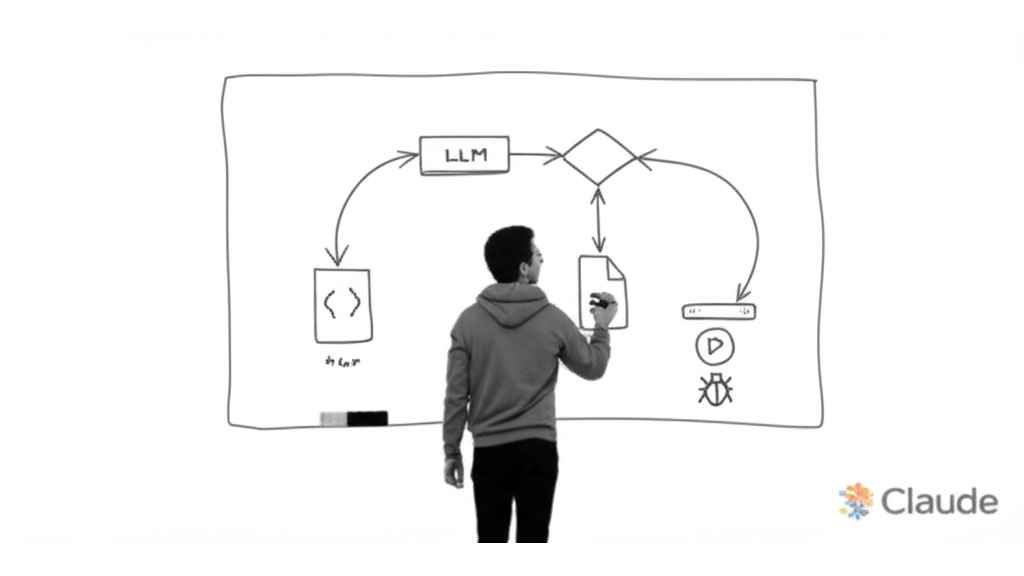

- LLMs are becoming integral to critical development functions, including security vulnerability fixes, test-driven development, and software architecture planning.

- There’s growing interest in developing specialized coding agents, particularly for generating structured configurations rather than raw code.

It shocks me when people say that LLMs don’t make them more productive, because my experience has been the complete opposite, especially with Claude Code.

I’ve literally built the entire MVP of my startup on Claude Code and now have paying customers.

Claude outperforms OpenAI’s and Google’s models by such a large margin that the others are functionally useless in comparison.

Navigating the Hurdles of LLM Integration

- A significant challenge lies in using LLMs effectively: some commenters attribute poor results to a skill gap in prompt engineering rather than to the models themselves.

- Developers encounter frustrating limitations, such as LLMs failing on complex debugging tasks, producing errors, or getting stuck in loops.

- Progress can stall, with LLMs making “half-baked changes” or failing to fix regressions, leading to developer frustration during refactoring or optimization.

- Concerns exist regarding the long-term reliability and corporate practices of LLM providers, including product longevity and potential censorship.

Either I’m worse than them at programming, to the point that I find an LLM useful and they don’t, or they don’t know how to use LLMs for coding.

Oof, this comes at a hard moment in my Claude Code usage. I’m trying to have it help me debug some Elastic issues… after a few minutes it spits out a zillion lines of obfuscated JS and says: Error: kill EPERM

Lately I have noticed progress stalling. I’m in the middle of some refactoring/bug fixing/optimization, but it’s constantly running into issues, making half-baked changes, not able to fix regressions, etc.

What do people think of Google’s Gemini (Pro?) compared to Claude for code? I really like a lot of what Google produces, but they can’t seem to keep a product that they don’t shut down.

Strategies for Effective AI-Assisted Development

- Master Prompt Engineering: Crafting detailed, context-rich prompts is crucial for guiding LLMs effectively, including explaining what the tool is and what the user is trying to accomplish.

- Break Down Complex Problems: For challenging tasks like refactoring or debugging, breaking them into smaller, manageable chunks can improve LLM performance and reduce errors.

- Prioritize Structured Output: Generating structured configurations (like YAML/JSON) instead of raw, complex code can produce outputs that are more reliable and easier to validate and debug.

- Utilize Trace and Debugging Tools: Tools like Claude Trace let developers inspect the prompts and tool calls behind a session, which helps when debugging and optimizing an agent’s behavior.

Long prompts are good – and don’t forget basic things like explaining in the prompt what the tool is, how to help the user, etc.
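To make that advice concrete, here is a minimal Python sketch of assembling a long prompt from explicit parts, so none of the "basic things" get left out. The `PromptSpec` fields, the section headings, and the example values are hypothetical choices for illustration. They are not the prompt format Claude Code or any provider actually uses.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces an explicit prompt should carry."""
    tool_description: str  # what the tool/agent is
    user_intent: str        # what the user is ultimately trying to achieve
    task: str               # the concrete request for this turn
    constraints: list[str] = field(default_factory=list)  # optional rules to follow


def build_prompt(spec: PromptSpec) -> str:
    """Join the pieces into one sectioned prompt string, skipping empty constraints."""
    sections = [
        "## What this tool is\n" + spec.tool_description,
        "## How to help the user\n" + spec.user_intent,
        "## Task\n" + spec.task,
    ]
    if spec.constraints:
        bullets = "\n".join(f"- {c}" for c in spec.constraints)
        sections.append("## Constraints\n" + bullets)
    return "\n\n".join(sections)


if __name__ == "__main__":
    spec = PromptSpec(
        tool_description="A command-line coding assistant with access to this repository.",
        user_intent="The user is refactoring a billing module and wants behavior to stay identical.",
        task="Extract the tax calculation in billing.py into its own function.",
        constraints=["Do not change public function signatures.", "Run the existing tests after editing."],
    )
    print(build_prompt(spec))
```

Each part lives in its own field. That makes a missing piece, such as the description of what the tool is, easy to spot before the prompt is sent.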

If anyone has pointers, I’m all ears!! Might have to break it into smaller chunks or something.
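One way to act on "smaller chunks" when a change spans a large file is to split the source into pieces that fit a size budget, then hand them to the model one at a time. The sketch below makes two simplifying assumptions. First, it treats blank lines as the boundaries between top-level blocks. Second, it uses a character budget as a rough stand-in for a token limit. `chunk_by_blocks` is a hypothetical helper, not part of any tool mentioned in the thread.

```python
def chunk_by_blocks(source: str, max_chars: int) -> list[str]:
    """Greedily pack blank-line-separated blocks into chunks of at most max_chars.

    A single block longer than max_chars becomes its own oversized chunk
    instead of being split mid-block.
    """
    blocks = [b for b in source.split("\n\n") if b.strip()]
    chunks: list[str] = []
    current: list[str] = []
    current_len = 0  # length of "\n\n".join(current)
    for block in blocks:
        # +2 accounts for the "\n\n" separator re-inserted between blocks
        added = len(block) + (2 if current else 0)
        if current and current_len + added > max_chars:
            chunks.append("\n\n".join(current))
            current, current_len = [], 0
            added = len(block)
        current.append(block)
        current_len += added
    if current:
        chunks.append("\n\n".join(current))
    return chunks


if __name__ == "__main__":
    source = "def a():\n    return 1\n\ndef b():\n    return 2\n\ndef c():\n    return 3"
    for i, chunk in enumerate(chunk_by_blocks(source, 40), start=1):
        print(f"--- chunk {i} ---\n{chunk}")
```

Each chunk can then go into its own request, with the tests run before moving to the next. That keeps any regression tied to one small change.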

My theory is that agents producing valid YAML/JSON schemas could be more reliable than code generation. The output is constrained, easier to validate, and when it breaks, you can actually debug it.
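The commenter's theory can be sketched in Python with only the standard library. The code parses the agent's output as JSON and checks it against a small schema before anything uses it. The `SCHEMA` fields and the `validate_config` helper are hypothetical. A real project might use a dedicated JSON Schema validator instead of this hand-rolled type check.

```python
import json
from typing import Any, Optional

# Hypothetical schema for an agent-generated service config:
# each required field maps to the Python type json.loads should produce for it.
SCHEMA: dict[str, type] = {"service": str, "replicas": int, "ports": list}


def validate_config(raw: str) -> tuple[Optional[dict[str, Any]], list[str]]:
    """Parse an agent's output as JSON and check it against SCHEMA.

    Returns (config, []) when valid, or (None, errors) with one readable
    message per problem, suitable for feeding back to the agent.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        # The decoder reports exactly where parsing failed.
        return None, [f"invalid JSON at line {exc.lineno}, column {exc.colno}: {exc.msg}"]

    if not isinstance(data, dict):
        return None, ["top-level value must be a JSON object"]

    errors: list[str] = []
    for key, expected in SCHEMA.items():
        if key not in data:
            errors.append(f"missing required field '{key}'")
            continue
        value = data[key]
        # bool is a subclass of int in Python, so reject true/false for int fields explicitly.
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            errors.append(f"field '{key}' should be {expected.__name__}, got {type(value).__name__}")

    # A constrained format should also reject fields it does not define.
    for key in sorted(data.keys() - SCHEMA.keys()):
        errors.append(f"unexpected field '{key}'")

    return (data, []) if not errors else (None, errors)


if __name__ == "__main__":
    print(validate_config('{"service": "api", "replicas": 2, "ports": [80, 443]}'))
    print(validate_config('{"service": "api", "replicas": "two", "debug": true}'))
```

Every failure yields a specific message, such as a missing field or a wrong type. Those messages can be sent back to the agent for a retry, which is one reading of the point that constrained output is easier to debug than free-form code.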

If you are really interested in how it works, I strongly recommend looking at Claude Trace: It dumps out a JSON file as well as a very nicely formatted HTML file that shows you every single tool and all the prompts that were used for a session.