The pursuit of Artificial General Intelligence (AGI) continues to captivate the tech world, fueled by rapid advances in large language models (LLMs). But as these systems grow more capable, a critical debate emerges: is AGI merely an engineering challenge requiring more data and bigger models, or is something more fundamental missing from our current approach?

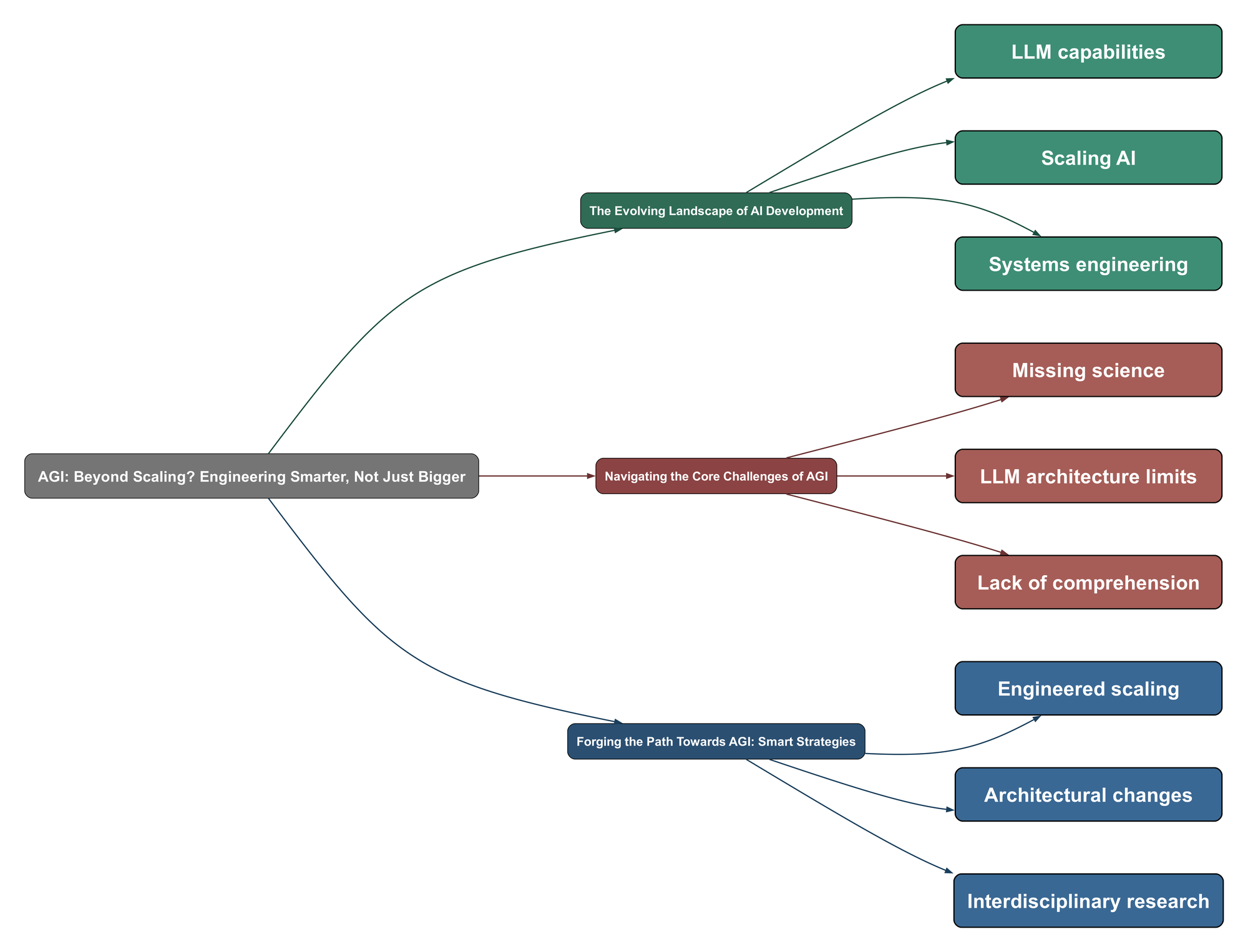

The Evolving Landscape of AI Development

- LLMs’ Remarkable Capabilities: Current LLMs like GPT, Claude, and Gemini have demonstrated astonishing abilities, leading some to believe we’re on the cusp of AGI.

Am I the only one who feels that Claude Code is what they would have imagined basic AGI to be like 10 years ago?

- The "Bitter Lesson" Persists: Rich Sutton’s "Bitter Lesson" observes that general methods which scale with computation have repeatedly overtaken approaches built on hand-crafted human knowledge. This principle has guided much of LLM development.

If you believe the bitter lesson, all the handwavy "engineering" is better done with more data.

- Emergence of Systems Thinking: Beyond raw model size, there’s a growing recognition of the need for "systems thinking," with early examples like tool-augmented agents and memory-augmented frameworks starting to appear. These hint at a future where orchestration is key.

You can already see the beginnings of "systems thinking" in products like Claude Code, tool-augmented agents, and memory-augmented frameworks.
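To make "tool-augmented" and "memory-augmented" concrete, here is a minimal sketch of the orchestration loop such systems share. Everything in it is an assumption for illustration: `fake_model` is a hard-coded stand-in for an LLM call, and the `TOOLS` registry and the `name: argument` action format are hypothetical, not any product's actual protocol.

```python
from typing import Callable, Dict, List

# Hypothetical tool registry: plain Python callables keyed by name.
TOOLS: Dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(float(x) for x in arg.split())),
    "upper": lambda arg: arg.upper(),
}


def fake_model(prompt: str, memory: List[str]) -> str:
    """Stand-in for an LLM call (assumption, not a real API).

    Returns either 'tool_name: argument' or 'final: answer'.
    """
    if memory:
        # A tool result is already in memory, so report it as the answer.
        return "final: " + memory[-1]
    if any(ch.isdigit() for ch in prompt):
        return "add: " + prompt
    return "upper: " + prompt


def run_agent(prompt: str, max_steps: int = 3) -> str:
    """Loop: ask the model, run the tool it names, store the result in memory."""
    memory: List[str] = []
    for _ in range(max_steps):
        action, _, arg = fake_model(prompt, memory).partition(": ")
        if action == "final":
            return arg
        memory.append(TOOLS[action](arg))
    # Step budget exhausted: fall back to the last stored result, if any.
    return memory[-1] if memory else ""


print(run_agent("2 3 4.5"))
print(run_agent("hello"))
```

The point of the sketch is that the "intelligence" of the overall system lives partly in the loop, the tool registry, and the memory, not only in the model being called.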

- Enhanced User Intent Understanding: A significant contribution of LLMs is their ability to better understand user intent from natural language, a crucial step for human-computer interaction.

Arguably the only building block that LLMs have contributed is that we have better user intent understanding now; a computer can just read text and extract intent from it much better than before.

Navigating the Core Challenges of AGI

- Missing Fundamental Science: Many argue that AGI isn’t just an engineering problem but requires a breakthrough in fundamental science. LLMs, despite their scale, may not possess the architectural properties for true general intelligence.

There is something more fundamental missing, as in missing science, not missing engineering.

- Biological vs. Artificial Constructs: A critical unknown is whether AGI is even possible outside of a biological construct, given the complex, continuous, and motivated nature of human intelligence.

We don’t know if AGI is even possible outside of a biological construct yet. This is key.

- Architectural Limitations of LLMs: LLM architectures are built to be trainable by gradient descent, which requires the computation to be differentiable end to end. Critics argue this rules out hard, discrete branching inside the model and limits complex, branching reasoning or human-like "thinking," especially when thoughts aren’t language-based.

The architecture has to allow for gradient descent to be a viable training strategy; this means no branching (routing is bolted on).
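The commenter's point about branching can be illustrated with a small numerical sketch. It assumes two toy "experts" and a single routing weight `w`; all names are hypothetical. A hard `if` gives gradient descent no signal about how to change the routing decision, while a soft, sigmoid-weighted mix (the general idea behind learned routing) does.

```python
import math


def expert_a(x: float) -> float:
    return 2.0 * x


def expert_b(x: float) -> float:
    return -1.0 * x


def hard_branch(w: float, x: float) -> float:
    # Discrete choice: the output is piecewise constant in w.
    return expert_a(x) if w * x > 0 else expert_b(x)


def soft_route(w: float, x: float) -> float:
    # Smooth gate in (0, 1) blends the two experts instead of picking one.
    gate = 1.0 / (1.0 + math.exp(-w * x))
    return gate * expert_a(x) + (1.0 - gate) * expert_b(x)


def grad_wrt_w(f, w: float, x: float, eps: float = 1e-6) -> float:
    # Central finite difference: a numerical stand-in for backpropagation.
    return (f(w + eps, x) - f(w - eps, x)) / (2 * eps)


w, x = 0.5, 1.0
print("hard branch gradient:", grad_wrt_w(hard_branch, w, x))
print("soft route gradient: ", grad_wrt_w(soft_route, w, x))
```

Away from the decision boundary the hard branch's gradient with respect to `w` is zero, so the routing weight never learns; the soft version has a nonzero gradient everywhere. This is a toy illustration of the differentiability constraint, not a claim about how any particular LLM implements routing.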

- Lack of Comprehension and Motivation: True AGI demands "artificial comprehension" – a dynamic, ongoing validation of observations and self-developed understanding, coupled with intrinsic motivation, which current systems lack.

This AGI would be something different: this is understanding, comprehending.

Forging the Path Towards AGI: Smart Strategies

- "Bigger + Engineered Smarter": The path forward likely involves combining the power of scaling with sophisticated engineering. This means developing robust scaffolding, reliable systems, and composable architectures around powerful models.

So maybe the real path forward is not "bigger vs. smarter," but bigger + engineered smarter.

- Fundamental Architectural Shifts: Achieving AGI may necessitate entirely new architectural paradigms that move beyond the static, autoregressive nature of current LLMs, allowing for continuous feedback and dynamic context management.

I think AGI will likely involve an architectural change to how models work.

- Interdisciplinary Foundations: A deeper understanding of neuroscience and psychology, focusing on the first principles of human intelligence, motivation, and consciousness, is crucial for designing truly general systems.

We have to account for first principles in how intelligence works, starting from the origin of ideas and how humans process their ideas in novel ways.

- Prioritizing "Comprehension": Future AI development should focus on building capabilities for "artificial comprehension" – systems that can dynamically assess plausibility, validate observations, and self-develop understanding, rather than just pattern-matching.

That would be artificial comprehension, something we’ve not even scratched.

The journey to AGI looks more nuanced than simply scaling up existing models. It demands a holistic approach, integrating advanced engineering with fundamental scientific breakthroughs and a deeper understanding of intelligence itself. The debate continues, but much of the discussion points toward a future where deliberate system design and foundational insights matter as much as computational power.