Eh, you all heard the latest? AI is getting smarter, faster, and cheaper. But can these new LLMs like Gemini and OpenAI’s o3-mini really help us with our daily grind here in Singapore? Forget just coding – we’re talking about real-world applications. Can they help us sort out our CPF investments, plan our next HDB renovation, or even just write a better email to the boss? Let’s see what the buzz is about and whether these tech advancements are worth the hype, or just another case of ‘kiasu’ tech.

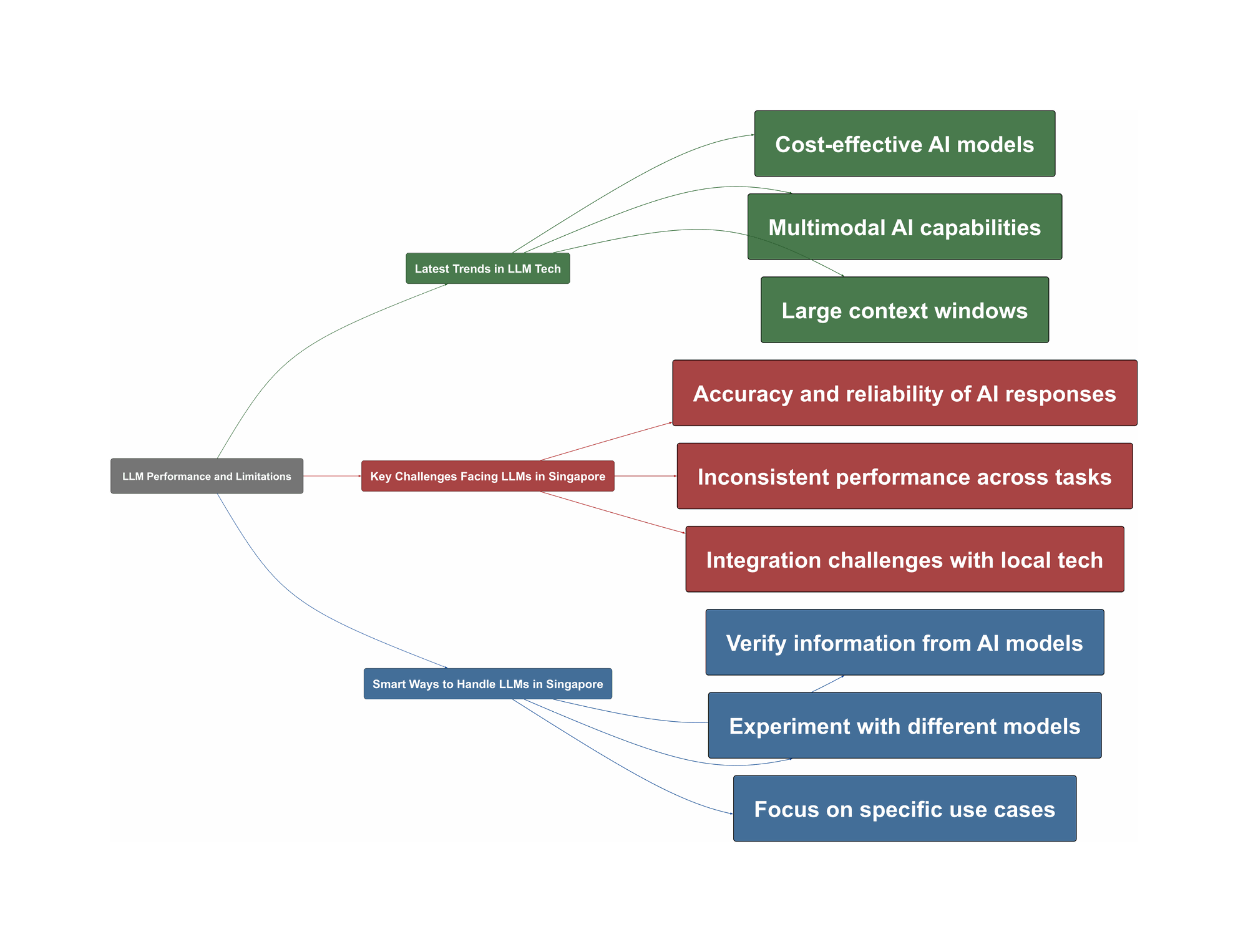

Latest Trends in LLM Tech

The AI scene is moving faster than a Grab during peak hour. Here’s what’s hot:

- Cost-Effective Models: OpenAI’s o3-mini is making waves, with some users reporting that it costs up to 10x less than its predecessor, o1.

“o3-mini is significantly cheaper than o1, with some users reporting a 10x cost reduction”

- Multimodal Capabilities: Gemini 2.0 Flash is showing off its skills in image and document processing, a big plus for Singaporean businesses dealing with lots of paperwork.

- Expanded Context Windows: Gemini’s context window of up to 2 million tokens is like having a super-long memory, so the AI can take in long documents whole instead of needing them broken into smaller pieces.

“The large context window (up to 2 million tokens) is seen as a major advantage, potentially reducing the need for Retrieval-Augmented Generation (RAG) in some cases.”
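To make the context window point a bit more concrete, here is a rough sketch of how you might check whether a document is likely to fit before deciding between "just paste everything in" and a RAG setup. The 2 million figure comes from the quote above; the 4-characters-per-token ratio and the reply reserve are assumptions for illustration only, and a real tokenizer will give different counts.

```python
import math

GEMINI_CONTEXT_TOKENS = 2_000_000  # upper limit quoted in the article
CHARS_PER_TOKEN = 4.0              # assumption: rough average for English text, not a real tokenizer


def estimate_tokens(text: str, chars_per_token: float = CHARS_PER_TOKEN) -> int:
    """Estimate the token count from character length (heuristic only)."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(
    text: str,
    window: int = GEMINI_CONTEXT_TOKENS,
    reserve_for_reply: int = 8_000,  # assumption: room left for the model's answer
) -> bool:
    """Return True if the estimated prompt size plus the reply reserve fits the window."""
    return estimate_tokens(text) + reserve_for_reply <= window


if __name__ == "__main__":
    short_doc = "a" * 400
    print(estimate_tokens(short_doc), fits_in_context(short_doc))
```

If the check fails, that is the cue to chunk the document or fall back to retrieval. If it passes, treat that as "probably fits" and not as a guarantee.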

Key Challenges Facing LLMs in Singapore

So, it all sounds great, but got any problems, or what? Here’s the ‘kiam siap’ side of things:

- Accuracy Concerns: Some of these AI models are giving out ‘chinchai’ answers, especially when it comes to research. This is a big problem if you’re trying to make important decisions.

“Multiple users report instances where the tool generated factually incorrect information, fabricated details, or confused sources.”

- Inconsistent Performance: Even with the latest models, the results can be all over the place. One minute, it’s acing a coding challenge; the next, it’s struggling with a simple request.

- Integration Issues: Integrating these tools into existing Singaporean tech infrastructure can be a headache, especially if you need the model to stick to a specific UI component library.

“Others report issues with consistency and adherence to instructions, particularly when used with tools like Cursor, where it struggles to consistently use specified UI component libraries.”

- Bias and Limitations: Some models show bias or struggle with local context. One user reported that Gemini refused to discuss a chili recipe because it mentioned Obama.

Smart Ways to Handle LLMs in Singapore

Don’t worry, there’s hope. Here’s how to navigate the LLM landscape:

- Verify Everything: Always double-check the information the AI gives you, especially for sensitive topics like finance or healthcare. Don’t just trust; verify, okay?

- Experiment and Test: Try out different models to see which one works best for your needs. Don’t be afraid to ‘play play’ and see what they can do.

- Use it for Specific Tasks: Instead of relying on AI for everything, focus on using it for specific tasks where it shines, like summarization or generating drafts.

- Consider Singapore-Specific Data: If you’re training your own models, make sure to incorporate Singaporean data to improve accuracy and relevance.
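The "verify" and "experiment" tips above can be combined into a tiny test harness: ask each model the same questions you already know the answers to, then see who gets them right. This is a minimal sketch only. The two "models" below are hypothetical stand-in functions, not real API calls, and the substring check is a deliberately crude way of scoring.

```python
from typing import Callable, Dict, List, Tuple

# A "model" here is any function that takes a prompt and returns text.
Model = Callable[[str], str]


def score_models(
    models: Dict[str, Model], cases: List[Tuple[str, str]]
) -> Dict[str, float]:
    """Return, per model, the fraction of cases whose expected answer appears in the reply."""
    if not cases:
        raise ValueError("need at least one test case")
    scores: Dict[str, float] = {}
    for name, ask in models.items():
        hits = sum(
            1 for prompt, expected in cases if expected.lower() in ask(prompt).lower()
        )
        scores[name] = hits / len(cases)
    return scores


# Hypothetical stand-ins for illustration; swap in real model calls yourself.
KNOWN_ANSWERS = {"What is 2 + 2?": "4", "How many days are in a week?": "7"}


def careful_model(prompt: str) -> str:
    return KNOWN_ANSWERS.get(prompt, "Not sure")


def chinchai_model(prompt: str) -> str:
    return "4"  # gives the same answer no matter what you ask


if __name__ == "__main__":
    cases = list(KNOWN_ANSWERS.items())
    print(score_models({"careful": careful_model, "chinchai": chinchai_model}, cases))
```

For real use, replace the stand-ins with wrappers around whichever models you are comparing, and use questions from your own domain where you already know the right answer.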

So, are these new LLMs going to solve all our problems? Not quite. But with a bit of ‘kiasi’ attitude and smart implementation, they can definitely make our lives a little easier. Now, go forth and explore – and don’t forget to share your experiences!