Singapore’s tech scene is buzzing with AI, and local developers are constantly seeking the best tools. AI-powered coding assistants are being adopted more widely, from startups to government efforts like the Smart Nation initiative. This post looks at the performance and usage of two prominent models, DeepSeek R1 and OpenAI’s o3-mini, based on recent Hacker News discussions, and what it means for us here in Singapore.

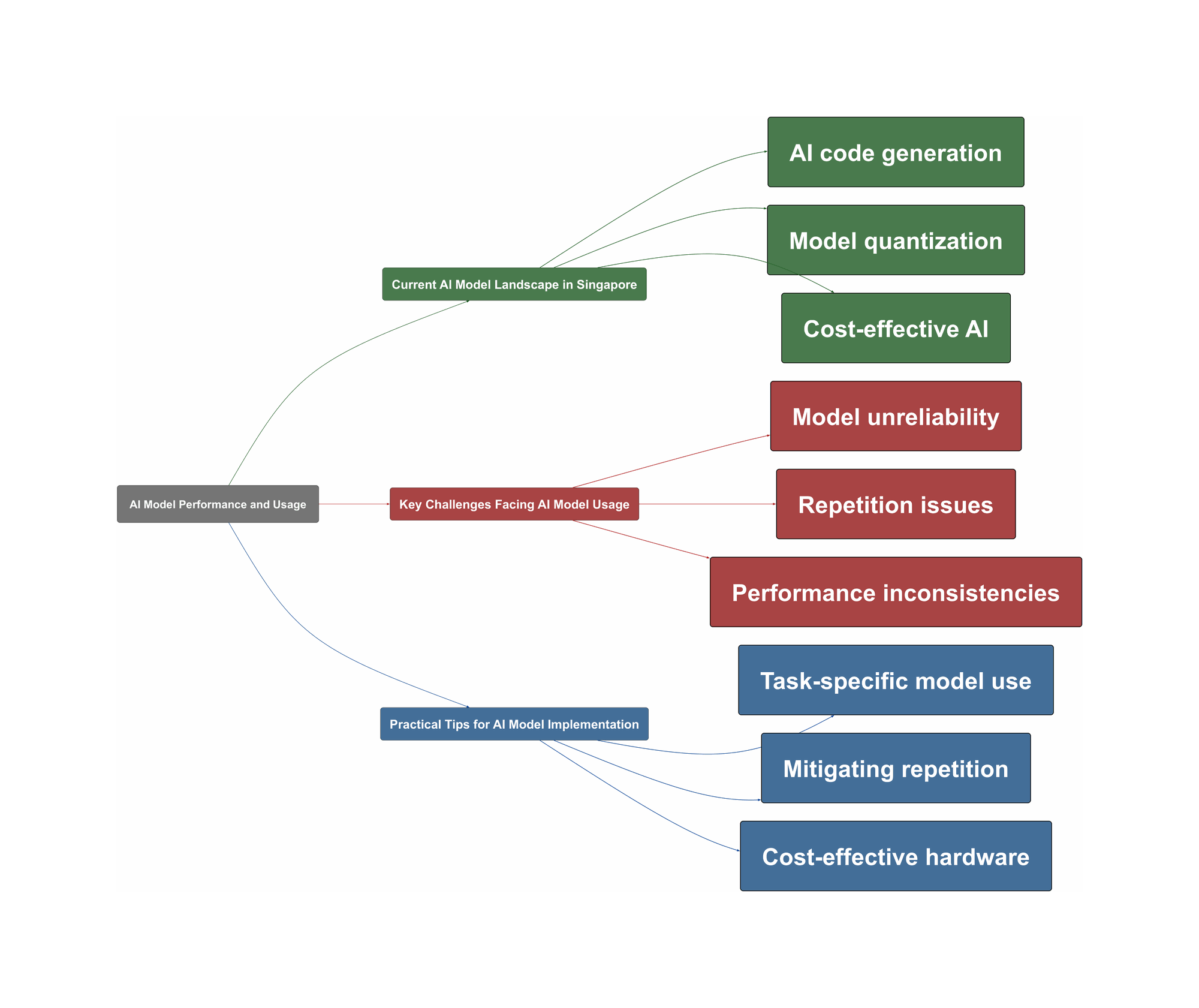

Current AI Model Landscape in Singapore

- DeepSeek R1 is making waves for its code generation capabilities. One llama.cpp pull request was reported to be 99% AI-generated.

- Local developers are integrating these models into their workflows. One user reports that 70% of their project’s new code is AI-written.

- OpenAI’s o3-mini is emerging as a cost-effective alternative, with reports of a 10x cost reduction compared with o1 at similar coding performance.

- Singaporean developers are experimenting with running these models on various hardware, from laptops to high-end GPUs, as local compute resources evolve.

- There’s a growing interest in open-source models to avoid vendor lock-in, which aligns with the spirit of Singapore’s tech ecosystem.

Key Challenges Facing AI Model Usage

- DeepSeek R1, while powerful, can be unreliable at identifying errors. It sometimes introduces errors of its own and then ‘gaslights’ the user about the original code.

- The 1.58-bit quantized version of DeepSeek R1 suffers from repetition issues, which limits its practical use, especially for real-time applications.

- o3-mini, while cheaper, performs inconsistently across benchmarks and can struggle to follow complex instructions, especially in coding contexts.

- There is a lack of standardized benchmarks for evaluating these models, making it difficult for Singaporean developers to assess their suitability for specific tasks.

- Hardware constraints and the high cost of powerful GPUs pose challenges for smaller teams and individual developers in Singapore, limiting the accessibility of these models.

“I’ve had some very negative experiences with DeepSeek R1 where it just introduces errors and then gaslights me about the original code.”

Practical Tips for AI Model Implementation

- For DeepSeek R1, focus on algorithm-heavy tasks where its strengths lie, but carefully review its code for potential errors. Consider using it for refactoring rather than generating complex new code from scratch.

- When using the quantized version of DeepSeek R1, try adjusting the KV cache settings or applying min_p sampling to reduce repetition.

- For o3-mini, use its ‘reasoning_effort’ setting to tune how much reasoning the model does for each task. Use it for code conversion and summarization, where it excels.

- To keep costs down, consider older GPUs or cloud instances, especially for running quantized models.

- Engage with local developer communities to share your experiences and learn from others facing similar challenges. Collaboration is key to navigating this rapidly evolving space.
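
To make the min_p tip above more concrete, here is a minimal sketch of min_p sampling in plain Python. The idea is to drop every token whose probability falls below a fraction (min_p) of the most likely token's probability, then sample from what remains. The function names and the default of 0.1 are illustrative assumptions, not values taken from the discussions, and real inference runtimes implement this internally as a sampling option.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw logits to probabilities (numerically stable)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def min_p_filter(probs, min_p=0.1):
    """Zero out tokens below min_p * (top probability), then renormalize.

    min_p=0.1 is an illustrative default, not a recommended setting.
    """
    if not 0.0 <= min_p <= 1.0:
        raise ValueError("min_p must be between 0 and 1")
    threshold = min_p * max(probs)
    kept = [p if p >= threshold else 0.0 for p in probs]
    total = sum(kept)  # never zero: the top token always survives
    return [p / total for p in kept]


def sample_token(logits, min_p=0.1, temperature=1.0, rng=None):
    """Sample one token index after applying the min_p filter."""
    rng = rng or random.Random()
    probs = min_p_filter(softmax(logits, temperature), min_p)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]
```

Because the cutoff scales with the top token's probability, the filter is strict when the model is confident and permissive when it is not. Whether this is enough to fix repetition in a given quantized build is something to verify on your own prompts.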

“Unsloth’s Danielhanchen is a god for getting this running so fast, with fixes for the issues”
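
For the ‘reasoning_effort’ tip, the sketch below builds a Chat Completions style request body for o3-mini with the effort level set explicitly. The helper name build_o3_mini_request is hypothetical, and the example only constructs the payload; it makes no network call. It assumes the setting accepts "low", "medium", or "high", so check the current API reference before relying on it.

```python
# Effort levels assumed to be accepted by the API for o3-mini.
ALLOWED_EFFORTS = ("low", "medium", "high")


def build_o3_mini_request(prompt, reasoning_effort="medium"):
    """Return a request body with reasoning_effort set for o3-mini.

    Hypothetical helper for illustration; it validates the effort
    level and builds the payload, nothing more.
    """
    if reasoning_effort not in ALLOWED_EFFORTS:
        raise ValueError(
            f"reasoning_effort must be one of {ALLOWED_EFFORTS}, "
            f"got {reasoning_effort!r}"
        )
    return {
        "model": "o3-mini",
        "reasoning_effort": reasoning_effort,
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    request = build_o3_mini_request(
        "Convert this loop to a list comprehension: ...",
        reasoning_effort="low",
    )
    print(request)
```

With a recent version of the official openai Python package, a payload like this could be passed as `client.chat.completions.create(**request)`. Trying the same prompt at two effort levels is a simple way to see whether the extra cost buys better output for your task.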
